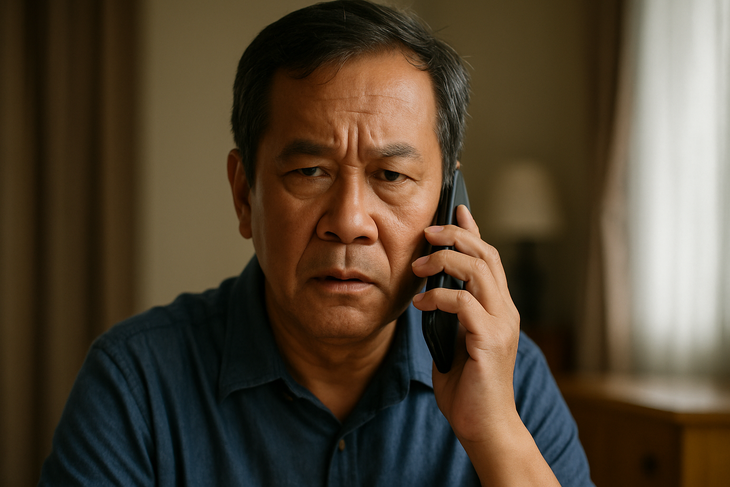

Scams that impersonate voices with deepfake audio

In an era of rapidly developing artificial intelligence, the human voice, once considered authentic evidence, has become a dangerous tool in the hands of criminals. Deepfake voice technology can produce voices nearly identical to those of real people, enabling convincing fake calls used to defraud victims and steal their property.

Why are deepfake voices so dangerous?

Deepfake voice is a technology that uses artificial intelligence (AI) and machine learning to generate a synthetic voice that closely mimics a real person's.

With modern models such as Tacotron and WaveNet, or voice-cloning platforms such as ElevenLabs and Respeecher, fraudsters need only 3 to 10 seconds of recorded speech to produce a deepfake that is up to 95% convincing.

Deepfake voices are especially dangerous because they can imitate a voice almost perfectly, from pronunciation and intonation to each person's unique speaking habits.

This makes it very difficult for victims to tell real from fake, especially when the voice belongs to a relative, friend, or superior.

Collecting voice samples is also easy, since most people today expose their voices on TikTok, social media livestreams, podcasts, or online meetings. More worryingly, deepfake voices do not leave a visual trace the way images or videos do, which makes investigations difficult and leaves victims more likely to lose their money.

Just a few seconds of a voice sample is enough to create a deepfake

Deepfake voice scams are becoming increasingly sophisticated and often follow a familiar script: impersonating an acquaintance in an emergency to create panic and pressure the victim into transferring money immediately.

In Vietnam, a mother received a call from her "son" saying he had been in an accident and urgently needed money. In the UK, a company director was scammed out of more than $240,000 after hearing his "boss" request a money transfer over the phone. In another case, an administrative employee was scammed after receiving a call from a "big boss" requesting payment to a "strategic partner"...

What these cases have in common is that the fake voice sounded exactly like a relative or superior, so the victims trusted it completely and did not take the time to verify.

Always verify, don't trust immediately

With deepfake voice scams on the rise, people are advised never to transfer money on the strength of a voice on the phone alone, even if it sounds exactly like a loved one. Instead, call back on a number you already know, or check the information through several channels before making any transaction.

Many experts also recommend setting up an "internal password", a code word known only within the family or business, to verify identity in unusual situations.

People should also limit posting videos in which their voice is clearly audible on social networks, especially long ones. It is equally important to proactively warn and guide vulnerable groups, such as the elderly and people with little exposure to technology, because they are prime targets of high-tech scams.

Voices of relatives, friends, and colleagues can all be faked.

In many countries, authorities have begun tightening control over deepfake technology through their own legal frameworks.

In the US, several states have banned the use of deepfakes in election campaigns or to spread misinformation. The European Union (EU) passed the AI Act, which requires organizations to be transparent and to clearly disclose when content is generated by artificial intelligence.

Meanwhile, in Vietnam, although there are no specific regulations on deepfake voices, related acts can still be prosecuted under current law as offences such as fraudulent appropriation of property, invasion of privacy, or identity fraud.

In reality, however, technology is developing far faster than the law can keep up with, leaving many loopholes for bad actors to exploit.

When voice is no longer evidence

The voice used to be something intimate and trustworthy, but with deepfakes it is no longer reliable proof. In the age of AI, everyone needs basic digital self-defense skills: verify proactively and stay vigilant, because any call could be a trap.

Source: https://tuoitre.vn/lua-dao-bang-deepfake-voice-ngay-cang-tinh-vi-phai-lam-sao-20250709105303634.htm
