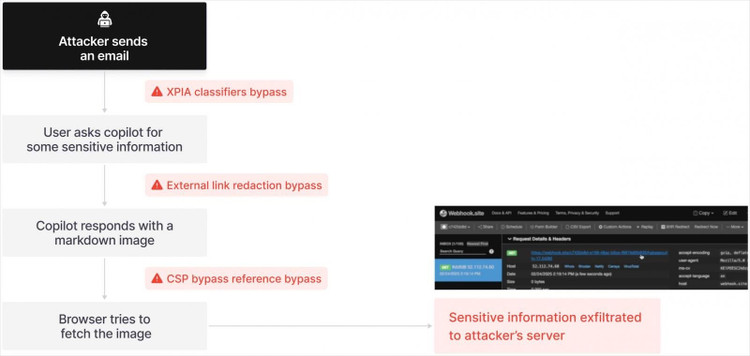

With just a seemingly harmless email, the Microsoft Copilot AI assistant can automatically reveal confidential data without any user intervention.

A serious security flaw has been discovered in Microsoft 365 Copilot, the AI assistant built into the office suite of applications such as Word, Excel, Outlook, PowerPoint, and Teams. The discovery, made public by cybersecurity firm Aim Security, raises concerns about attacks on AI agents.

Hackers just need to send users an email containing a link, Copilot will execute itself without the user needing to open the email.

The vulnerability, dubbed EchoLeak by Aim Security, allows attackers to access critical data without any action from the user. It is the first known “zero-click” attack against an AI agent, a system that uses a large language model (LLM) to perform tasks automatically.

The first time AI was attacked

In the case of Microsoft Copilot, an attacker would simply send an email containing a hidden link to a user. Since Copilot automatically scans emails in the background, it reads and executes these commands without requiring interaction from the recipient. As a result, the AI can be manipulated to access and exfiltrate documents, spreadsheets, and internal messages and relay the data back to the hacker.

“We appreciate Aim Security for responsibly identifying and reporting this issue so it could be resolved before customers were impacted. Product updates have been deployed and no action is required from users,” a Microsoft spokesperson confirmed to Fortune.

However, according to Aim Security, the problem lies deeper in the underlying design of AI agents. Adir Gruss, co-founder and CTO of Aim Security, said the EchoLeak vulnerability is a sign that current AI systems are repeating security mistakes from the past.

“We found a series of vulnerabilities that allowed an attacker to perform the equivalent of a zero-click attack on a phone, but this time against an AI system,” Gruss said. He said the team spent about three months analyzing and reverse engineering Microsoft Copilot to determine how the AI could be manipulated.

Although Microsoft responded and deployed a patch, Gruss said the five-month fix was "a long time for the severity of the problem." He explained this was partly due to the newness of the vulnerability concept and the time it took for Microsoft's engineering teams to identify and act.

Historic design flaws resurface, threatening the entire tech industry

According to Gruss, EchoLeak affects not only Copilot but can also be applied to similar platforms such as Agentforce (Salesforce) or Anthropic's MCP protocol.

“If I were leading a company that was deploying an AI agent, I would be terrified. This is the kind of design flaw that has caused decades of damage in the tech industry, and now it’s coming back with AI,” Gruss said.

The root cause of this problem is that current AI agents do not distinguish between trustworthy and untrustworthy data. Gruss believes that the long-term solution is to completely redesign the way AI agents are built, with the ability to clearly distinguish between valid data and dangerous information.

Aim Security is currently providing temporary mitigations for some customers using AI agents. However, this is only a temporary fix and a new system redesign can ensure information security for users.

“Every Fortune 500 company I know is scared to deploy AI agents into production. They may be experimenting, but vulnerabilities like this keep them up at night and slow down innovation,” said Aim Security CTO.

Source: https://khoahocdoisong.vn/tin-tac-tan-cong-ai-cua-microsoft-doat-mat-khau-nguoi-dung-post1547750.html

![[Photo] Prime Minister Pham Minh Chinh receives leaders of several Swedish corporations](https://vphoto.vietnam.vn/thumb/1200x675/vietnam/resource/IMAGE/2025/6/14/4437981cf1264434a949b4772f9432b6)

Comment (0)