“I can’t understand it,” said Andrew Wee, who has 30 years of experience in the data center and hardware industry in Silicon Valley.

What puzzled him, even angered him, were the projected energy demands of future AI supercomputers, the machines that are supposed to fuel humanity's great leap forward.

Wee, who has held senior positions at Apple and Meta and is now head of hardware for cloud provider Cloudflare, believes the current growth in energy needed for AI—which the World Economic Forum estimates will grow 50% per year through 2030—is unsustainable.
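To give a sense of what 50% annual growth implies, here is a minimal sketch. It assumes the rate compounds once a year from an arbitrary baseline of 1.0. The baseline, the five-year window and the function name `growth_multiple` are illustrative assumptions, not part of the World Economic Forum estimate.

```python
def growth_multiple(annual_rate: float, years: int) -> float:
    """Factor by which a quantity grows when compounded once per year."""
    return (1.0 + annual_rate) ** years


# Assumed baseline of 1.0 (hypothetical); 0.50 is the 50% annual rate cited above.
for years in range(1, 6):
    print(f"after {years} year(s): {growth_multiple(0.50, years):.2f}x the baseline")
```

Under these assumptions, demand would be roughly 7.6 times the baseline after five years of compounding.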

“We need to find technical solutions, policy solutions and other solutions to collectively address this,” Wee said.

A new path for AI chips

To that end, Wee’s team at Cloudflare is testing a completely new type of chip from Positron, a startup founded in 2023 that has just announced a new $51.6 million investment round.

These chips have the potential to be far more energy efficient than those from Nvidia, the industry leader, at inference tasks, the process of generating AI responses from user requests.

While Nvidia chips will continue to be used to train AI for the foreseeable future, more efficient inference could save companies tens of billions of dollars, and a corresponding amount of energy.

According to the WSJ, there are at least a dozen chip startups competing to sell cloud computing providers custom-built inference chips of the future.

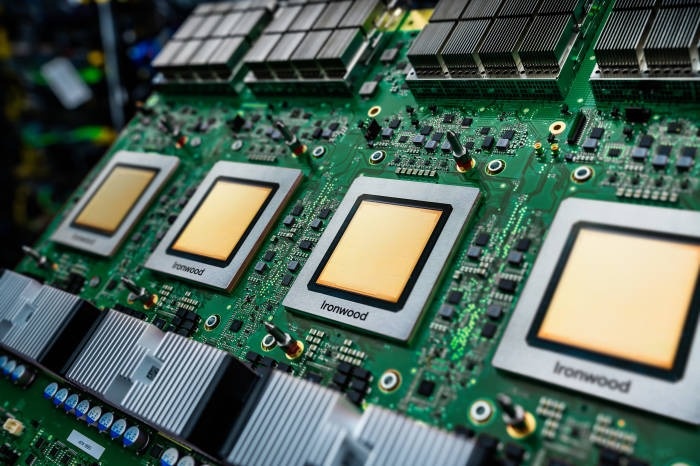

The Ironwood chip was developed by Google specifically for inference tasks. Photo: Google.

Google, Amazon, and Microsoft are also in the game, building inference-focused chips to power their own internal AI tools, and to sell to partners through cloud services.

To achieve their goals, makers of novel AI chips are using a “classic” strategy: redesigning chips from scratch, specifically for a new class of tasks that has suddenly become important in computing.

That was the formula for graphics cards in the past, and that's how Nvidia built its current success. Graphics chips were only later repurposed for AI, and in reality they have never been a perfect fit.

"Bottleneck"

Jonathan Ross, who used to head Google's AI chip development program, said he founded a startup called Groq because he believed there was a completely different way to design chips, optimized only to run powerful AI models.

Groq claims its chip can deliver AI responses much faster than Nvidia's best chip and, crucially, at just one-third the power consumption.

This is due to its unique design, in which memory is embedded in the chip rather than kept separate. It is plausible that the startup could deliver inference tasks at a lower cost than Nvidia’s systems, according to Jordan Nanos, an analyst at SemiAnalysis.

Meanwhile, Positron is taking a different approach to delivering faster inference. The startup, which is partnering with Cloudflare, has created a simplified chip with a narrower range of capabilities, aimed solely at performing tasks faster.

Positron’s next-generation system is expected to compete directly with Nvidia’s next-generation chip, called Vera Rubin. Measured against Nvidia’s roadmap, Positron’s chip will be 2-3 times more efficient while delivering 3-6 times more performance per unit of power consumed, according to Mitesh Agrawal, CEO of Positron.

Positron's new generation chip is simplified with a narrower range of capabilities, aimed only at performing tasks faster. Photo: Positron.

It's a truism in computing history that whenever hardware engineers figure out how to do something faster or more efficiently, programmers and consumers figure out how to use up all the new performance gains.

Mark Lohmeyer, vice president of AI and computing infrastructure at Google Cloud, said that because consumers and businesses keep adopting new, more demanding AI models, there is no end to the demand for AI, no matter how much more efficiently his team can deliver it.

Like most other major AI vendors, Google is working to find radical new ways to generate energy to power its systems, including nuclear and fusion power.

While new chips may help individual companies deliver AI more efficiently, the industry as a whole is still on track to consume more and more energy. As a recent report from Anthropic notes, that means energy production, not data centers and chips, could be the real bottleneck for future AI development.

Source: https://znews.vn/chia-khoa-cho-van-de-cua-ai-post1572212.html
