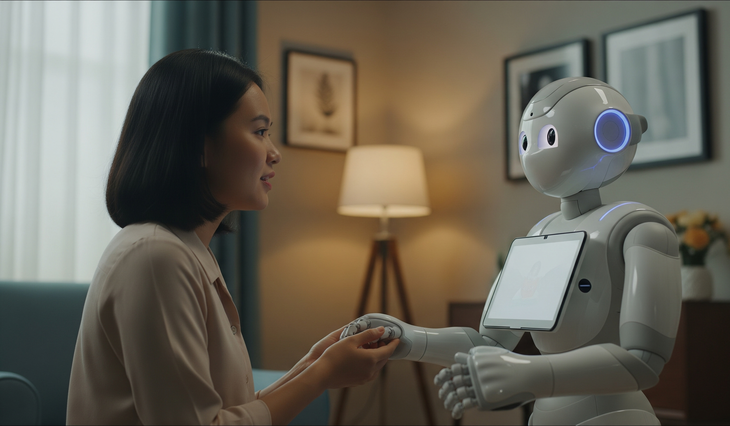

Image depicting a person confiding in a psychotherapy robot

Therapy has traditionally depended on a person who listens attentively and with empathy. But as artificial intelligence and natural language processing advance, a new generation of therapy robots is emerging.

No office visit and no human doctor are needed: a phone or tablet is enough to start a “chat” with a robot. But this raises a question: can feedback from a machine really be soothing?

Psychotherapy robots are getting smarter

The explosion of large language models (LLMs) such as GPT, Claude or Gemini has enabled therapy robots to communicate in natural language, giving coherent and empathetic responses. Start-ups such as Wysa, Woebot or Replika have developed AI-powered chat apps that can recognize emotions in text and adjust their responses to suit the user’s mood.

Behind seemingly simple conversations lies a complex language processing system that combines machine learning and sentiment analysis. AI models are trained on millions of anonymized conversations, along with behavioral psychology frameworks.

When a user sends a message like “I feel tired and hopeless,” the system not only responds with comforting words but can also recognize signs of emotional distress and suggest cognitive reframing exercises.
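As a rough illustration of the idea, the snippet below scores a message against a small word list and adds an exercise suggestion when the score is high. It is a toy sketch, not how Wysa, Woebot or Replika actually work: the lexicon, its weights, the threshold and the function names `distress_score` and `respond` are all assumptions made for this example, and real systems would rely on trained models rather than keyword matching.

```python
import re

# Hypothetical lexicon: illustrative words and weights, not taken from any real app.
DISTRESS_WORDS = {"hopeless": 3, "worthless": 3, "anxious": 2, "tired": 1, "sad": 1}


def distress_score(message: str) -> int:
    """Sum the weights of the distress words found in the message."""
    tokens = re.findall(r"[a-z']+", message.lower())
    return sum(DISTRESS_WORDS.get(token, 0) for token in tokens)


def respond(message: str, threshold: int = 3) -> str:
    """Return a supportive reply; add a reframing exercise if the score is high."""
    reply = "Thank you for sharing that with me."
    if distress_score(message) >= threshold:
        reply += " Would you like to try a short thought-reframing exercise?"
    return reply


print(respond("I feel tired and hopeless"))
```

With this lexicon, the sample message from the article matches "tired" and "hopeless", so it crosses the assumed threshold and receives the exercise suggestion.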

In addition to text processing, some systems also integrate AI that analyzes speech to gauge stress levels from speaking speed, intonation, or the frequency of pauses. From these signals, robots can "recognize" subtle changes in emotion even when the user does not mention them.
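Two of those signals, speaking speed and pause frequency, can be sketched from word timestamps. The example below assumes a speech-to-text step has already produced `(word, start, end)` tuples in seconds; that input format, the 0.7-second pause threshold and the function name `speech_features` are hypothetical choices for illustration. Intonation is left out because it requires analyzing the audio signal itself.

```python
from typing import Dict, List, Tuple

# (text, start_sec, end_sec): a hypothetical transcript format for this sketch.
Word = Tuple[str, float, float]


def speech_features(words: List[Word], pause_sec: float = 0.7) -> Dict[str, float]:
    """Compute speaking rate and count the silences between consecutive words."""
    if not words:
        return {"words_per_minute": 0.0, "pause_count": 0}
    duration = words[-1][2] - words[0][1]
    # A pause is a gap of at least pause_sec between one word's end and the next word's start.
    pauses = sum(
        1 for prev, cur in zip(words, words[1:]) if cur[1] - prev[2] >= pause_sec
    )
    rate = len(words) / duration * 60 if duration > 0 else 0.0
    return {"words_per_minute": rate, "pause_count": pauses}


sample = [("I", 0.0, 0.2), ("feel", 0.3, 0.6), ("tired", 2.0, 2.5)]
print(speech_features(sample))
```

A slow rate combined with many long pauses is only a weak hint on its own; a real system would have to compare such numbers against a baseline for the same speaker.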

Responses are also becoming more natural and less formulaic than those of older chatbots, thanks to models that continuously learn from previous interactions.

Cloud computing keeps all data synchronized, so the chat experience is seamless whether you are using a phone or a computer. AI does not rely only on what the user says; it also learns from their chat history, interaction times, the frequency of emotional keywords and similar signals to tailor its response style to each individual. That is why many people feel that their therapy robot actually “gets to know” them over time.

When the machine can listen, but not necessarily understand

According to Tuoi Tre Online's research, no matter how well AI can analyze language, there is still a gap between understanding semantics and feeling emotions. A robot can respond with standard words of encouragement, but it lacks the warmth that comes from human compassion.

In cases of severe mental crisis, robots cannot replace a timely human response, especially when specific action, intervention or emergency support is needed.

Furthermore, AI systems are still dependent on the data they are fed. If the training data set lacks diversity in culture, local language, or expression, the robot's responses may feel "cold" or biased in a particular context.

Some apps also run into limitations when users express emotions indirectly or through metaphors, which is common in conversations about mental health.

In addition, privacy concerns cannot be ignored in today's digital age. Psychological data is a sensitive type of information; if it is not encrypted and tightly controlled, there is a serious risk of exposure. As technology becomes increasingly personalized, anyone sharing emotions with a machine system should understand the risks involved.

There’s no denying that technology has made mental health care more accessible than ever. AI and therapeutic robots can serve as early companions, providing temporary relief from difficult emotions. But putting your full trust in a machine still requires caution.

By understanding the limits of the technology, users can take advantage of its benefits without becoming dependent on it, staying in control of their emotions rather than being led by clever lines of code.

Source: https://tuoitre.vn/robot-tri-lieu-tam-ly-co-thau-hieu-hay-chi-biet-lang-nghe-20250618102426124.htm
