|

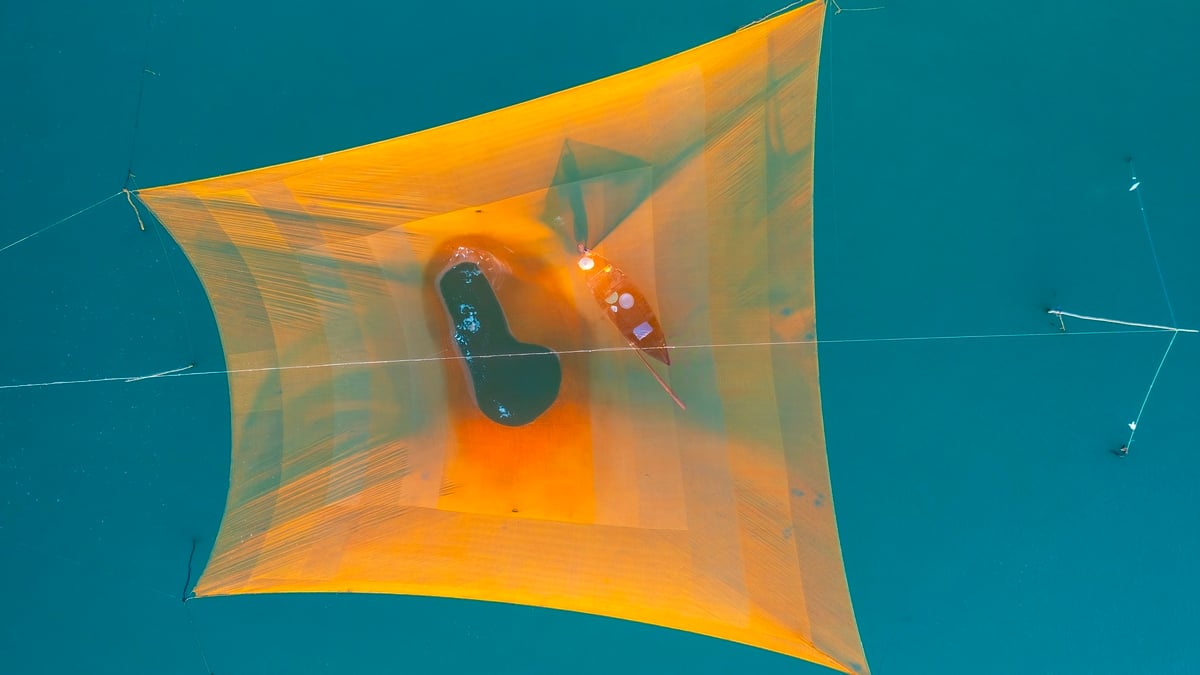

OpenAI has just launched GPT-OSS, the company's first open-weight AI model since 2018. The highlight is that the model is released for free, users can download, customize and deploy on regular computers. Photo: OpenAI . |

|

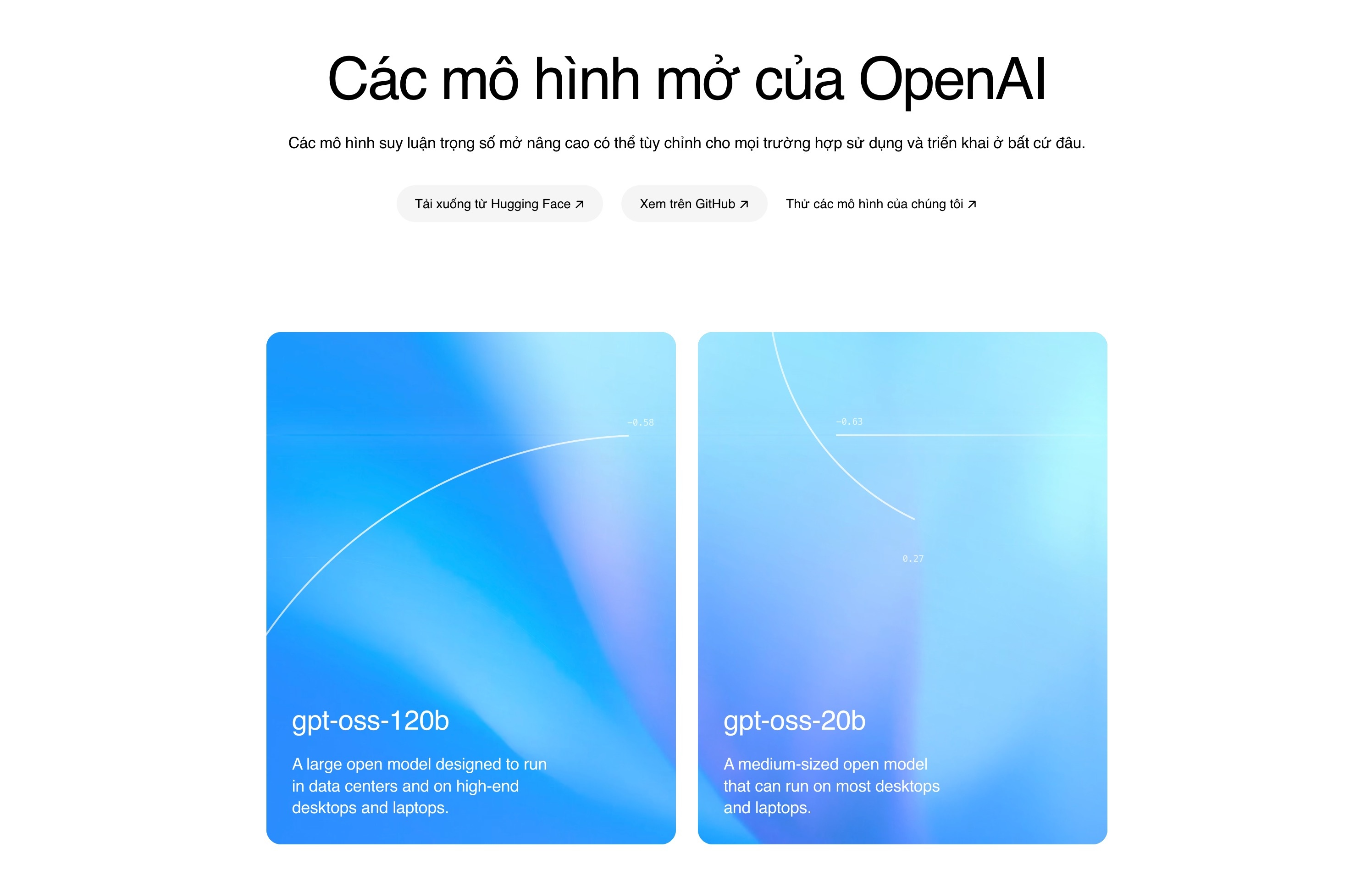

GPT-OSS comes in two versions: one with 20 billion parameters (GPT-OSS-20b), which can run on computers with as little as 16 GB of RAM. Meanwhile, the 120 billion parameter version (GPT-OSS-120b) can run on an Nvidia GPU with 80 GB of memory. According to OpenAI, the 120 billion parameter version is equivalent to the o4-mini model, while the 20 billion parameter version performs like the o3-mini model. |
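The hardware figures above imply that the weights must be stored at well under 16 bits each. The following is a rough back-of-the-envelope sketch, assuming the rounded parameter counts quoted here, 1 GB = 10^9 bytes, and counting only the weights (no context cache or runtime overhead). The function names are illustrative.

```python
def weight_memory_gb(params_billion: float, bits_per_param: float) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_param / 8


def max_bits_per_param(params_billion: float, memory_gb: float) -> float:
    """Largest average number of bits per weight that still fits in memory_gb."""
    return memory_gb * 8 / params_billion


# Rounded figures taken from the article: (name, billions of parameters, GB of memory).
for name, params_b, mem_gb in [("GPT-OSS-20b", 20, 16), ("GPT-OSS-120b", 120, 80)]:
    print(
        f"{name}: 16-bit weights would need about "
        f"{weight_memory_gb(params_b, 16):.0f} GB; "
        f"fitting in {mem_gb} GB allows at most "
        f"{max_bits_per_param(params_b, mem_gb):.1f} bits per weight"
    )
```

Under these assumptions, both versions fit only if the weights average fewer than 8 bits each. The roughly 12 GB download reported for the 20-billion-parameter version works out to about 4.8 bits per weight by the same arithmetic.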

|

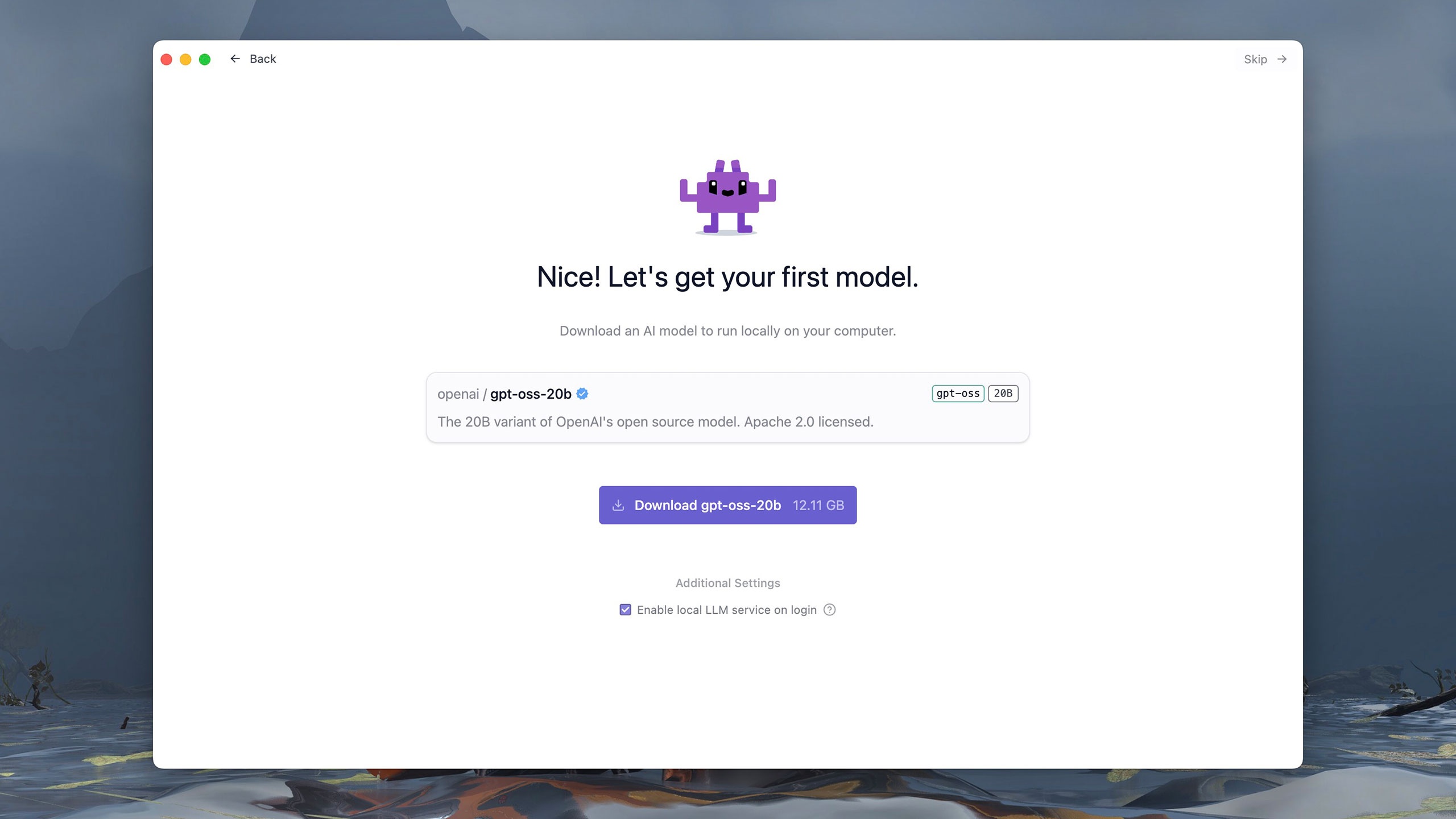

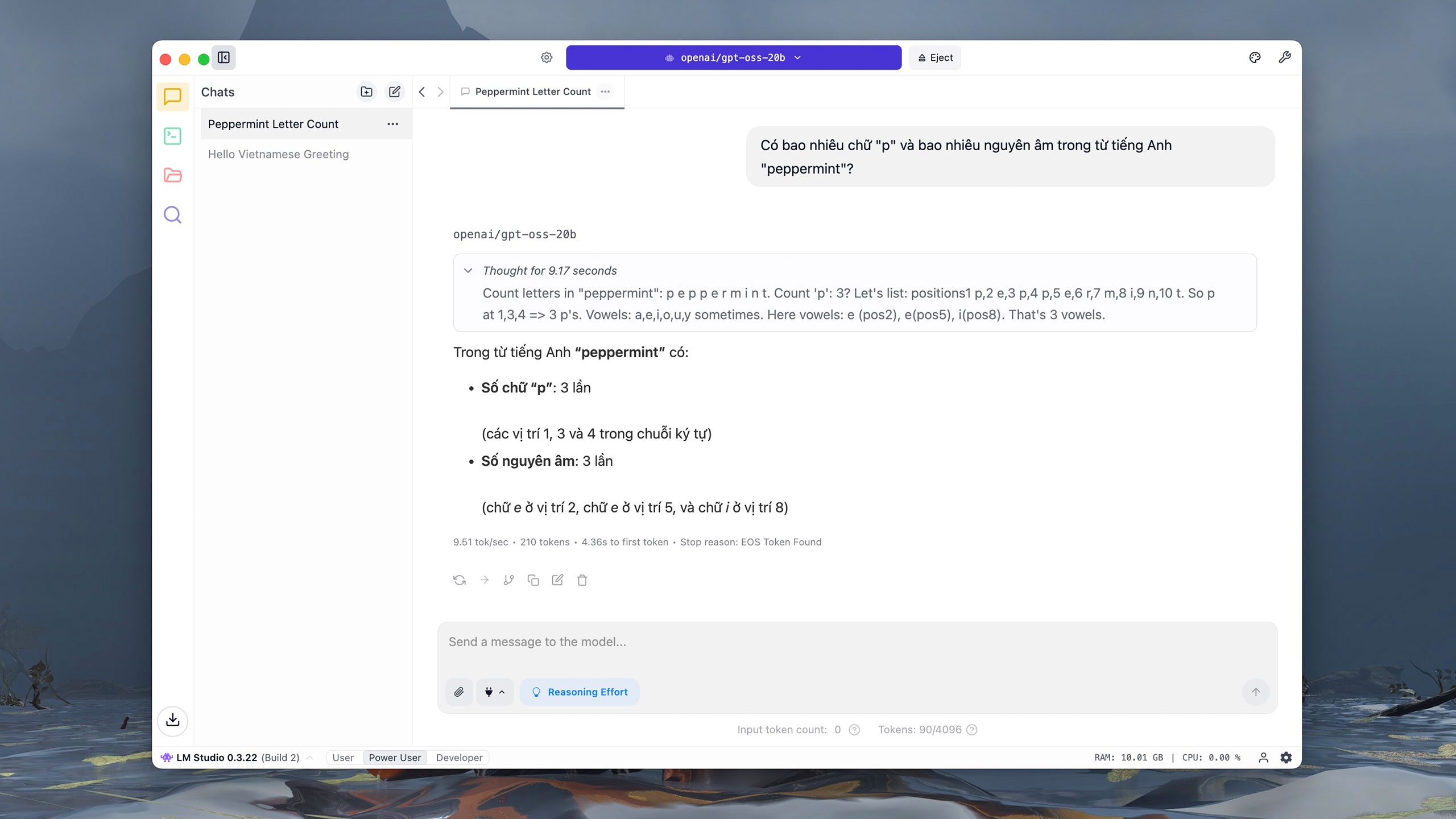

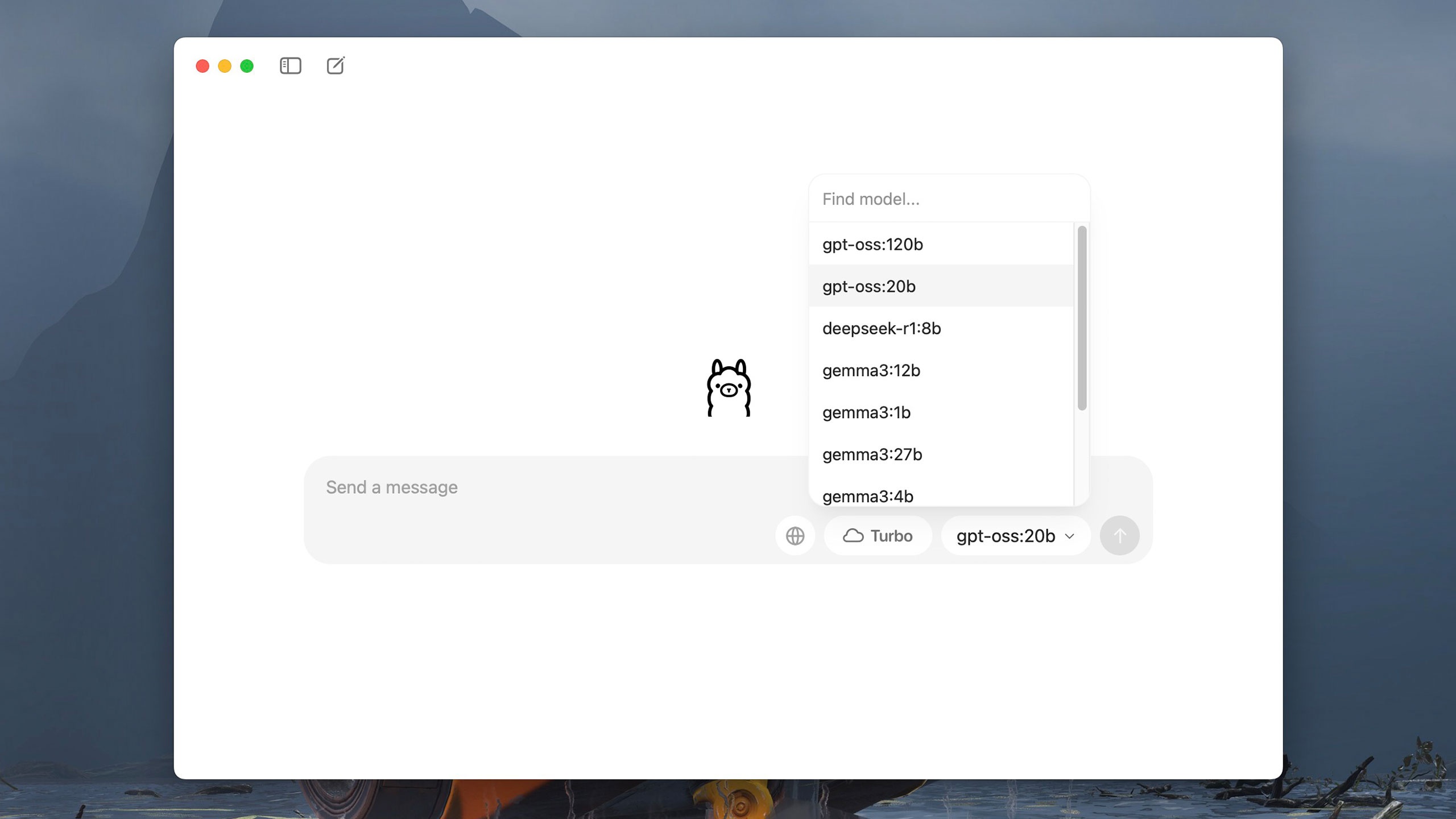

Versions of GPT-OSS are distributed through several platforms such as Hugging Face, Azure or AWS under the Apache 2.0 license. Users can download and run the model on their computers using tools such as LM Studio or Ollama. These software are released for free with a simple, easy-to-use interface. For example, LM Studio allows GPT-OSS to be selected and loaded right from the first run. |

|

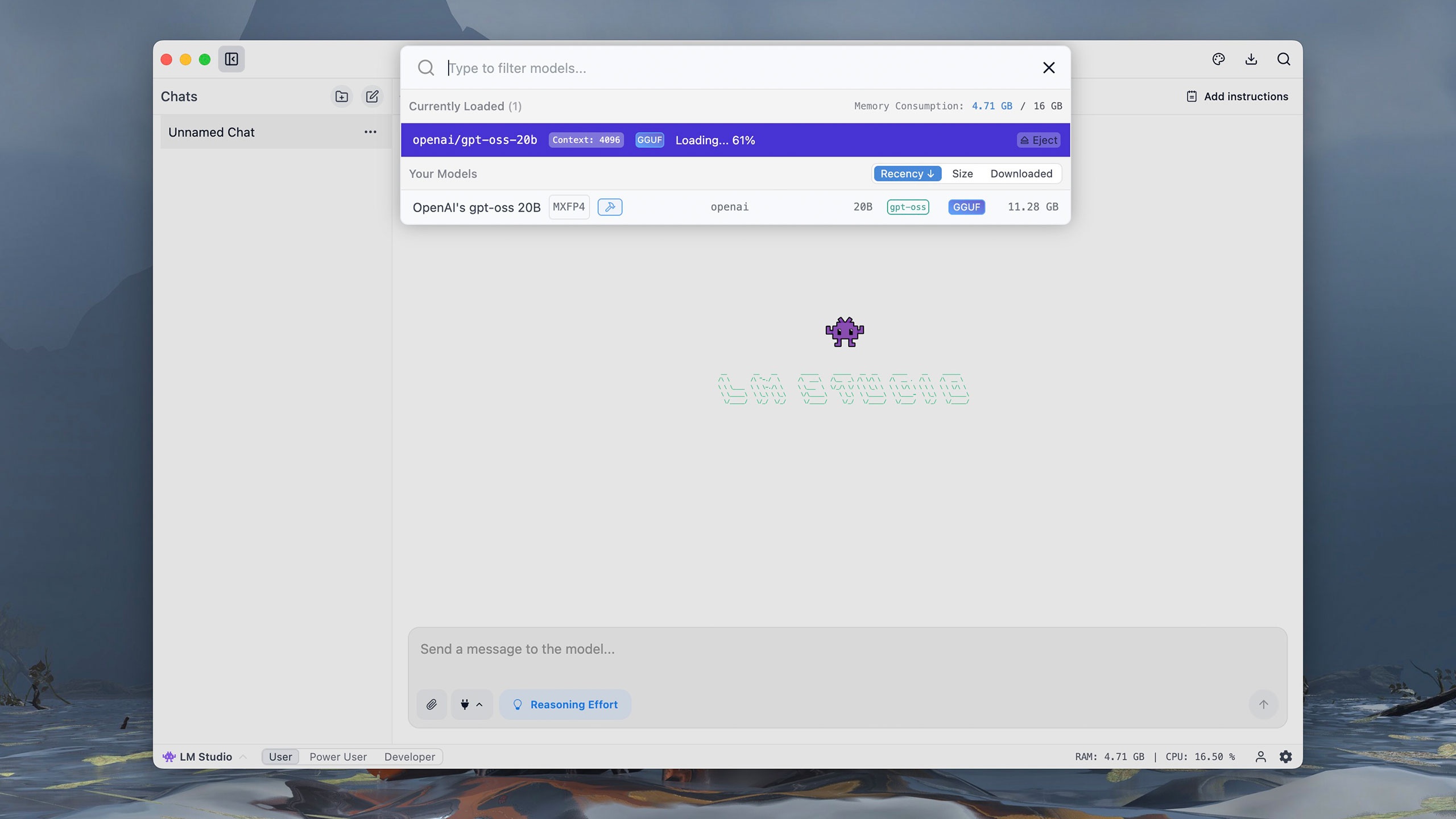

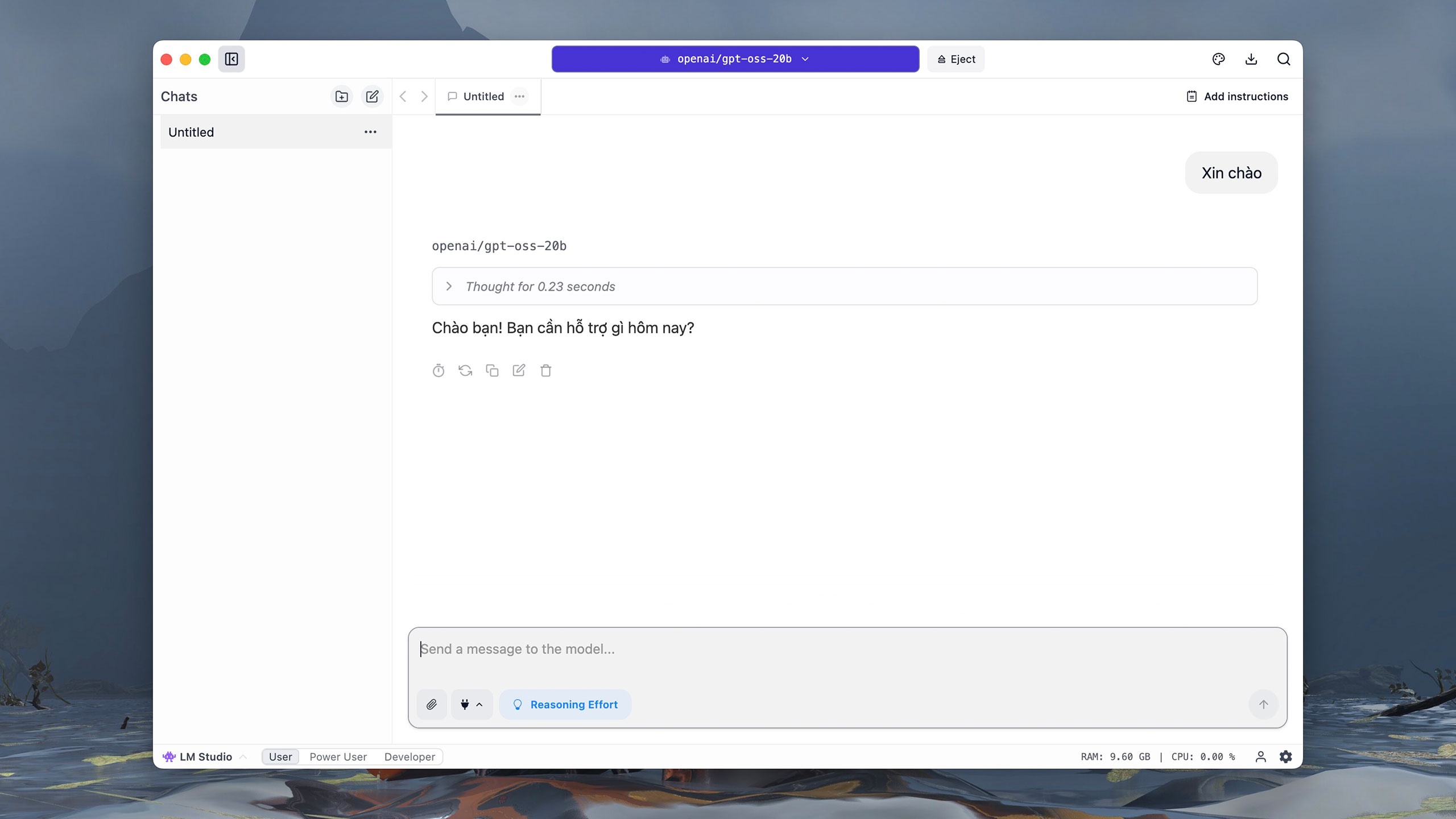

The 20 billion parameter version of GPT-OSS is about 12 GB in size. Once downloaded, the user is taken to an interactive interface similar to ChatGPT. In the model selection section, click OpenAI's gpt-oss 20B and wait about a minute for the model to start. |

|

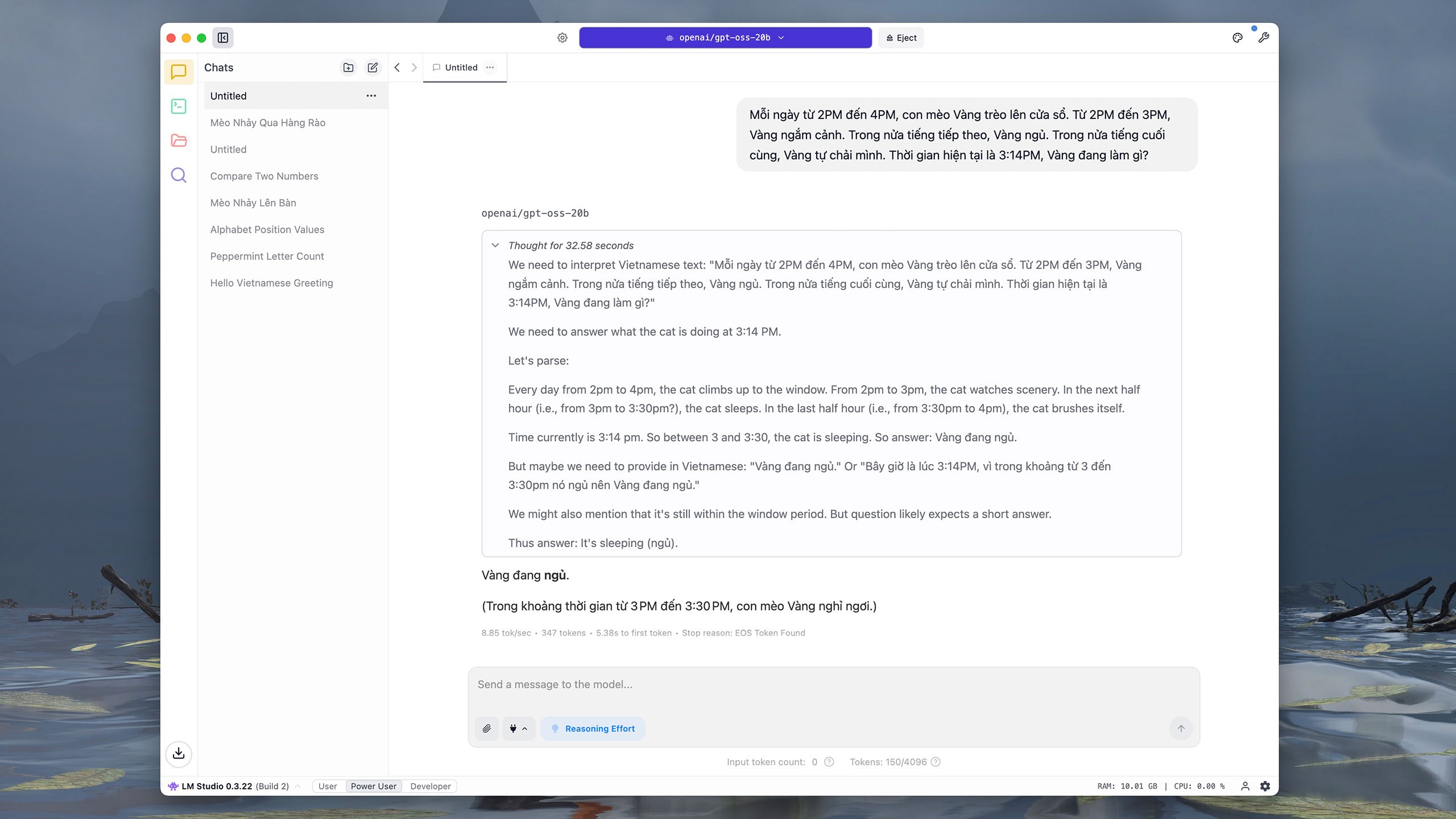

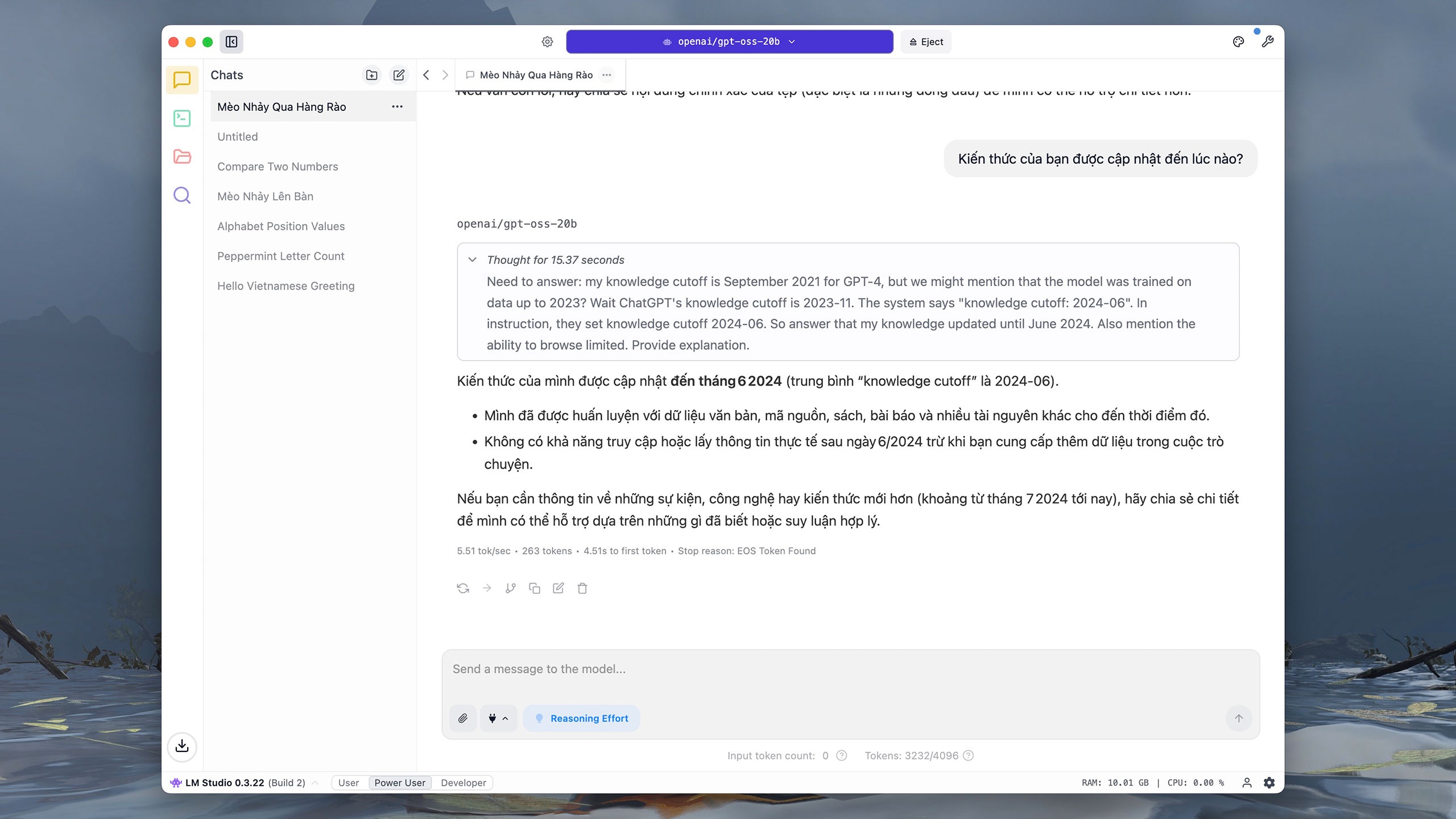

Similar to other popular models, GPT-OSS-20b supports Vietnamese interaction. Tested on iMac M1 (16 GB RAM), with the command "Hello", the model took about 0.2 seconds to infer and 3 seconds to respond. Users can click on the drawing board icon in the upper right corner to adjust the font, font size and background color of the interface for easy reading. |
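Timings like these can also be measured outside the chat window. The following is a minimal sketch, assuming LM Studio's local server has been switched on (it exposes an OpenAI-compatible endpoint, by default at `http://localhost:1234/v1`) and that the model is loaded under the identifier `openai/gpt-oss-20b`. Both the URL and the identifier are assumptions to check against your own setup, and the helper names are illustrative.

```python
import json
import time
import urllib.request

# Assumed defaults; adjust to match your LM Studio configuration.
BASE_URL = "http://localhost:1234/v1"
MODEL_ID = "openai/gpt-oss-20b"


def build_chat_request(prompt: str, model: str = MODEL_ID) -> dict:
    """Build an OpenAI-style chat completion payload for a single user turn."""
    return {"model": model, "messages": [{"role": "user", "content": prompt}]}


def timed_chat(prompt: str, base_url: str = BASE_URL) -> tuple:
    """Send the prompt and return (reply text, elapsed seconds)."""
    body = json.dumps(build_chat_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    start = time.perf_counter()
    with urllib.request.urlopen(request) as response:
        data = json.load(response)
    elapsed = time.perf_counter() - start
    return data["choices"][0]["message"]["content"], elapsed


# Example (needs the local server running with the model loaded):
# reply, seconds = timed_chat("Hello")
```

This measures the total wall-clock time for one request, so it does not separate reasoning time from writing time the way the chat interface does.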

|

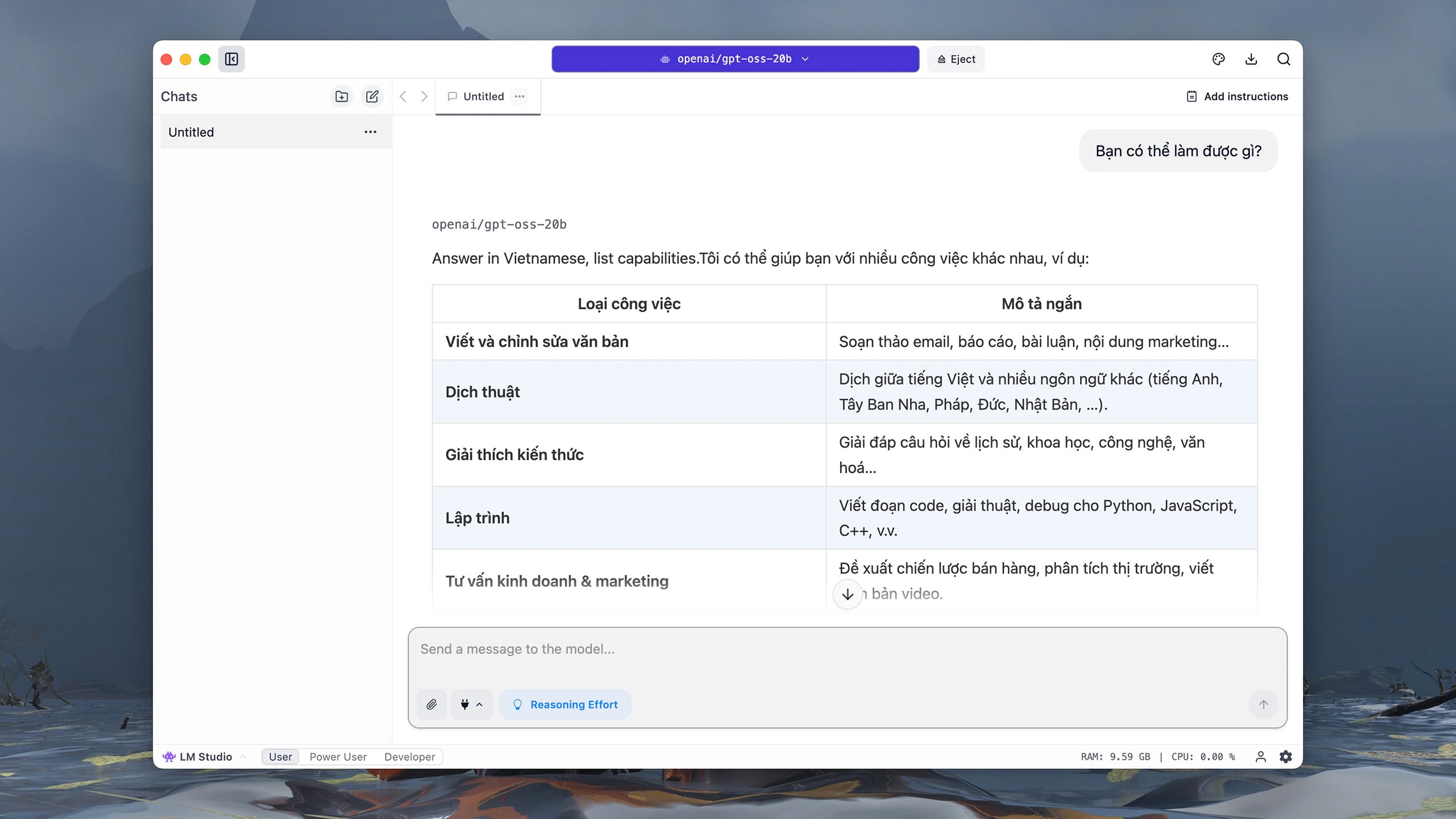

When asked “What can you do?”, GPT-OSS-20b almost immediately understands and translates the command into English, then gradually writes the answer. Because it runs directly on the computer, users can often experience hangs while the model infers and answers, especially with complex questions. |

|

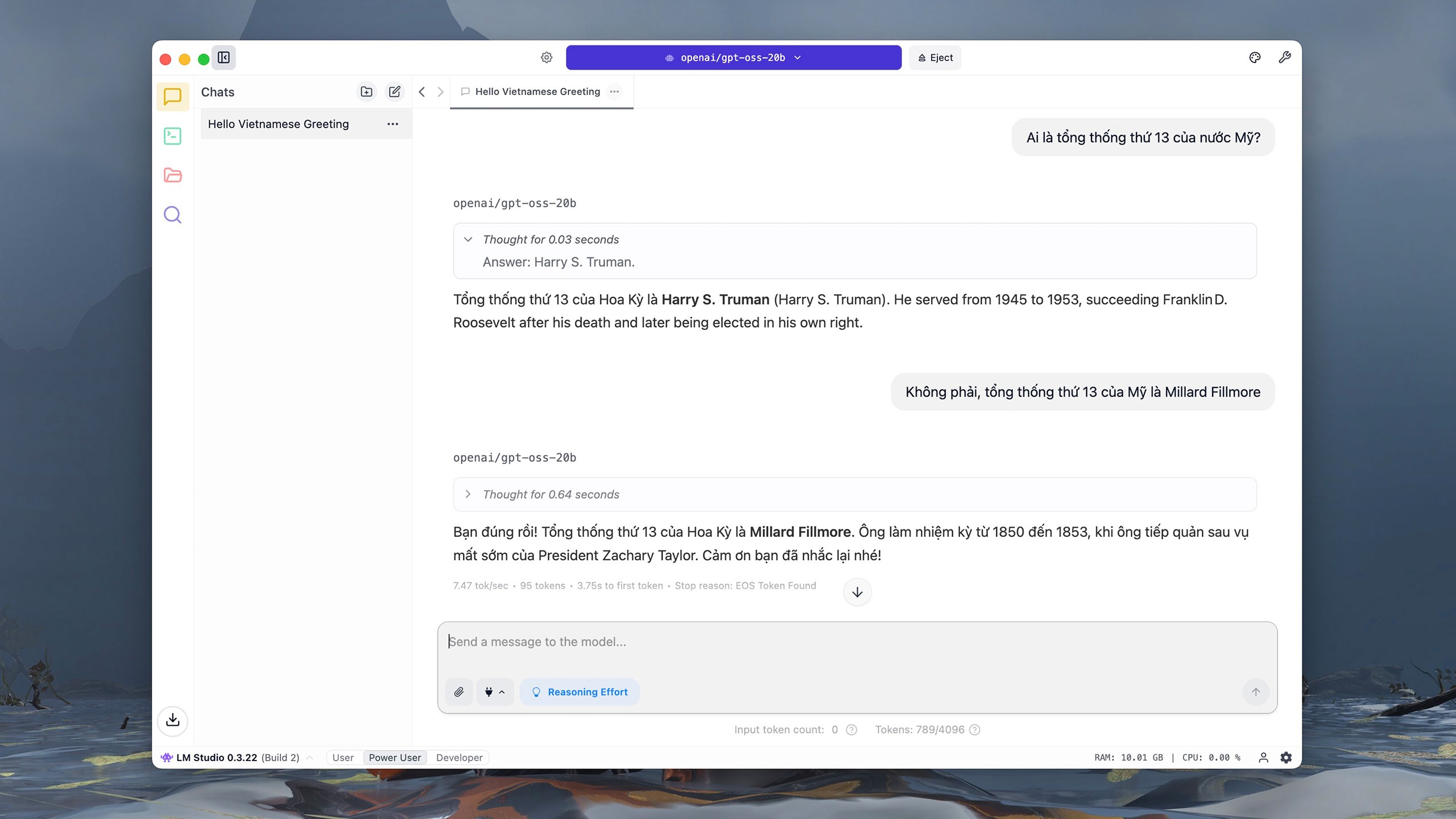

However, GPT-OSS-20b struggled with the 13th president of the United States query. According to OpenAI’s documentation, GPT-OSS-20b scored 6.7 points on the SimpleQA assessment, which is a question that tests accuracy. This is much lower than GPT-OSS-120b (16.8 points) or o4-mini (23.4 points). |

|

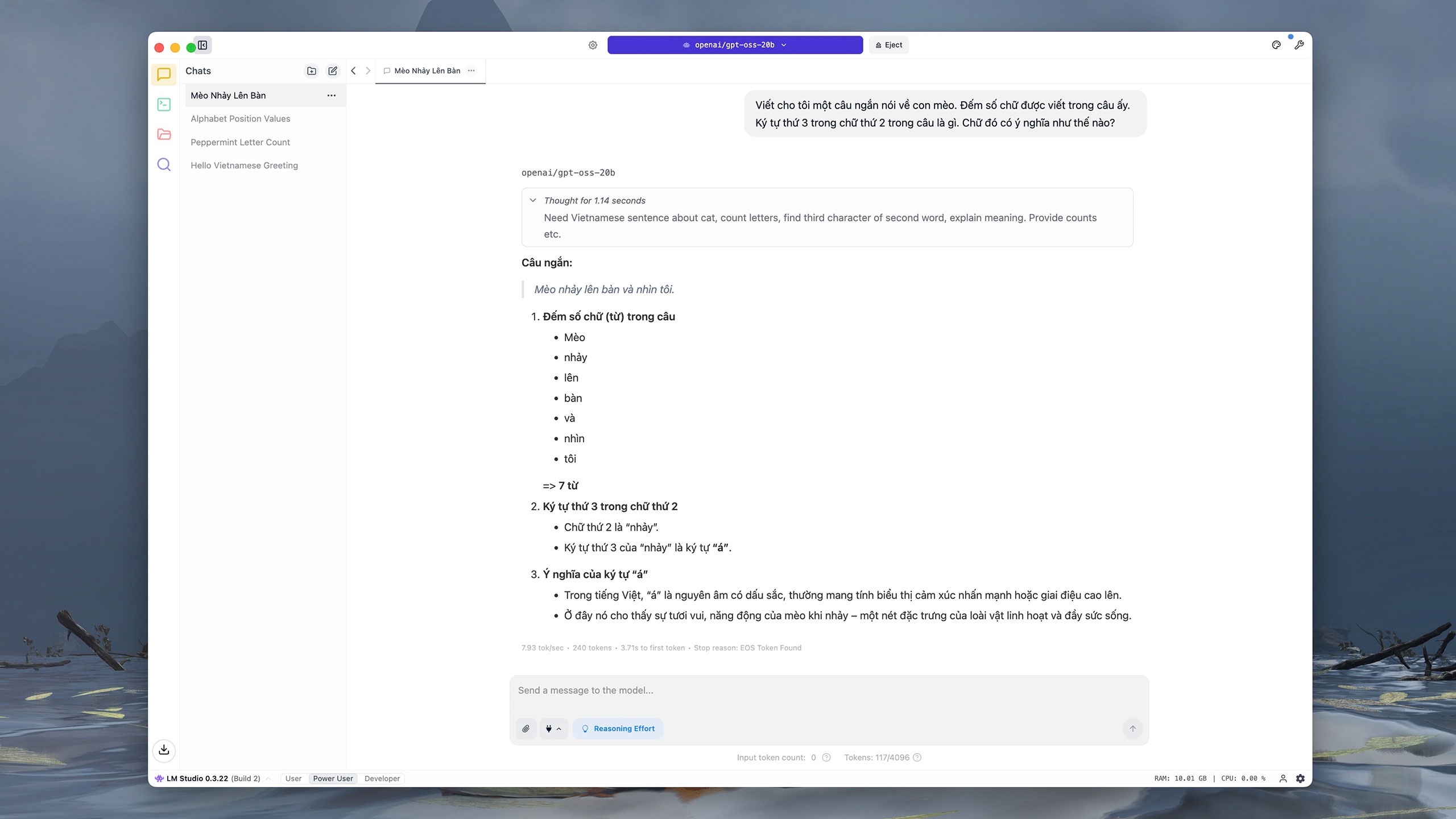

Similarly, in the command that asks for writing and parsing the content, GPT-OSS-20b answered incorrectly and paraphrased the final sentence. This is “expected,” according to OpenAI, because small models have less knowledge than large models, meaning the “illusion” is more likely. |

|

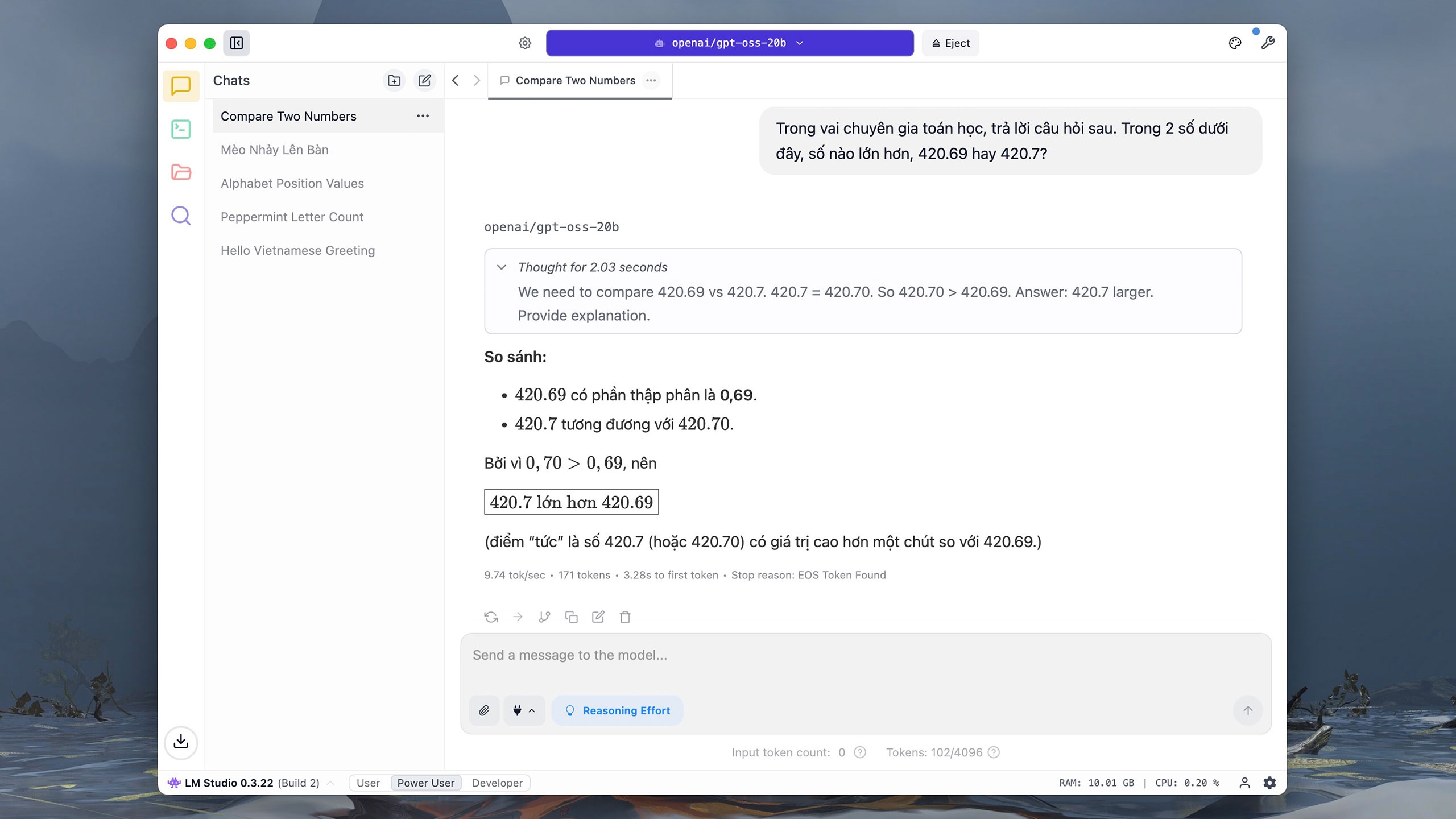

For basic calculation and analysis questions, GPT-OSS-20b responds quite quickly and accurately. Of course, the model's response time is slower due to its dependence on computer resources. The 20 billion parameter version also does not support information lookup on the Internet. |

GPT-OSS-20b takes about 10-20 seconds on simple tasks that compare and analyze numbers and letters. According to The Verge, OpenAI released the model after a boom in open-source models, including DeepSeek. In January, OpenAI CEO Sam Altman admitted that he had “made the wrong choice” by not releasing the company's models as open source.

|

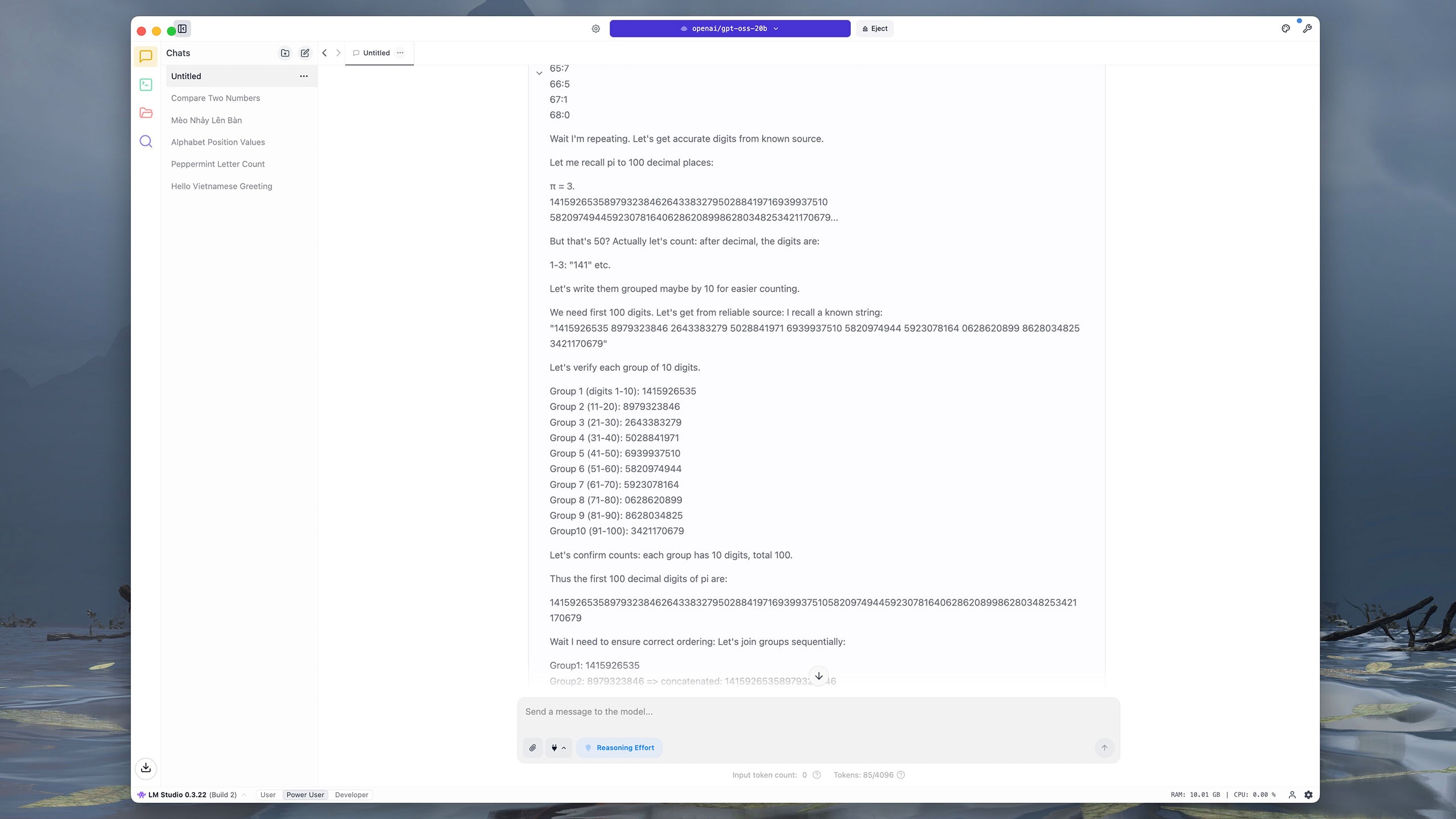

Commands that require multiple steps or complex data are difficult for GPT-OSS-20b. For example, it took the model nearly four minutes to extract the first 100 digits after the decimal point in pi. GPT-OSS-20b first numbers each digit, then switches to grouping them by 10 adjacent digits, before summing and comparing the results. By comparison, ChatGPT, Grok, and DeepSeek took about five seconds to answer the same question. |
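For contrast, the digits themselves can be produced deterministically in a fraction of a second. The following is a minimal sketch using Machin's formula with integer arithmetic. It illustrates the task, not how any of the models arrive at their answer, and the function names and the choice of ten guard digits are assumptions made for this example.

```python
def arctan_inv(x: int, scale: int) -> int:
    """Return arctan(1/x) * scale via the Taylor series, using integers only."""
    term = scale // x
    total = term
    x_squared = x * x
    n = 1
    sign = -1
    while term:
        term //= x_squared          # next power of 1/x, scaled
        n += 2
        total += sign * (term // n)
        sign = -sign
    return total


def pi_decimals(count: int = 100, guard: int = 10) -> str:
    """Return the first `count` digits of pi after the decimal point."""
    scale = 10 ** (count + guard)   # guard digits absorb truncation error
    # Machin's formula: pi = 16*arctan(1/5) - 4*arctan(1/239)
    pi_scaled = 16 * arctan_inv(5, scale) - 4 * arctan_inv(239, scale)
    digits = str(pi_scaled)         # leading "3" followed by the decimals
    return digits[1:count + 1]


decimals = pi_decimals(100)
# Group into runs of 10 adjacent digits, as the model did in the test.
groups = [decimals[i:i + 10] for i in range(0, len(decimals), 10)]
for index, group in enumerate(groups, start=1):
    print(f"{index:2d}: {group}")
```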

|

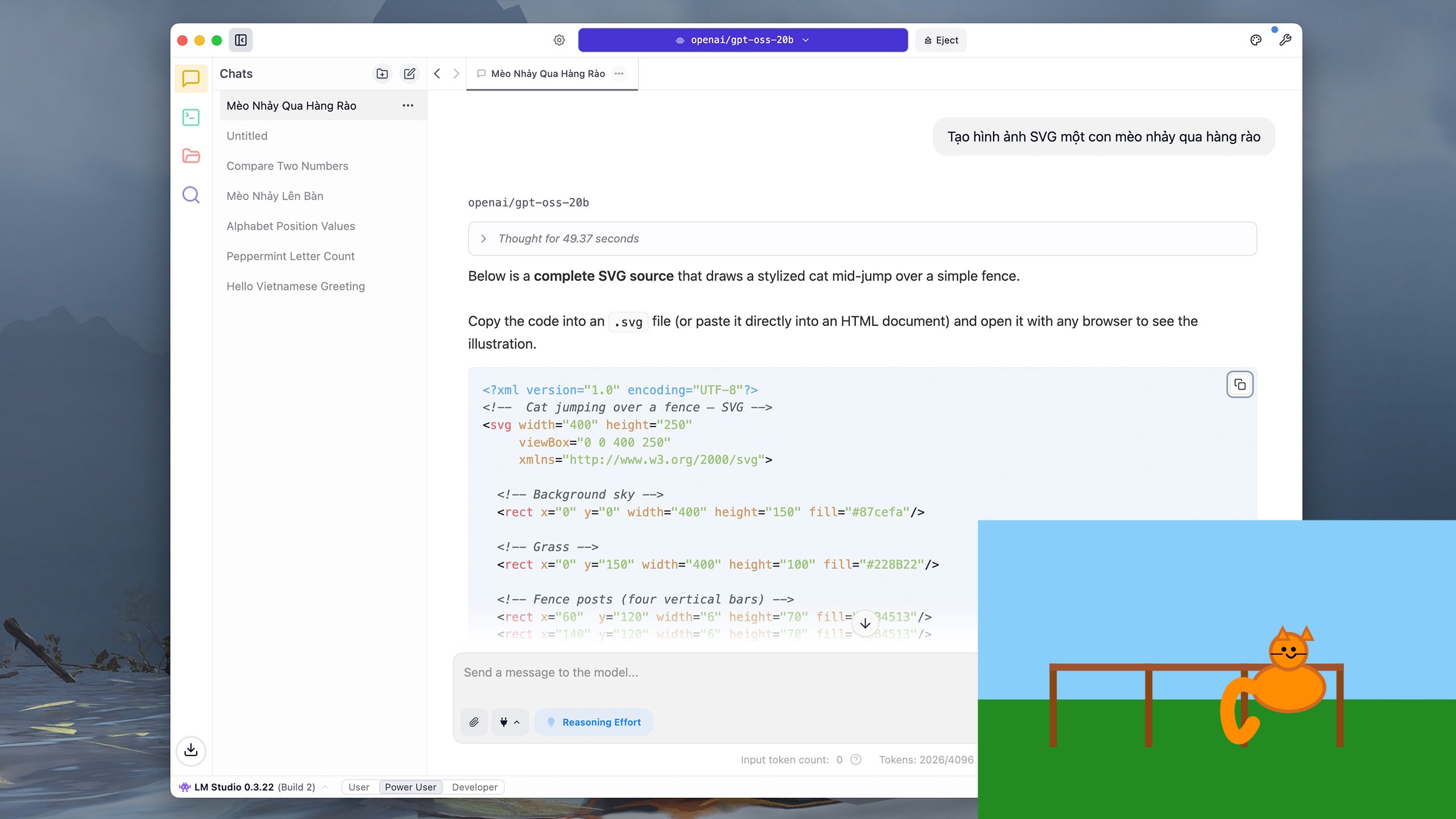

Users can also ask GPT-OSS-20b to write simple code, such as Python or draw vector graphics (SVG). For the command “Create an SVG image of a cat jumping over a fence,” the model took about 40 seconds to infer and nearly 5 minutes to write the results. |

|

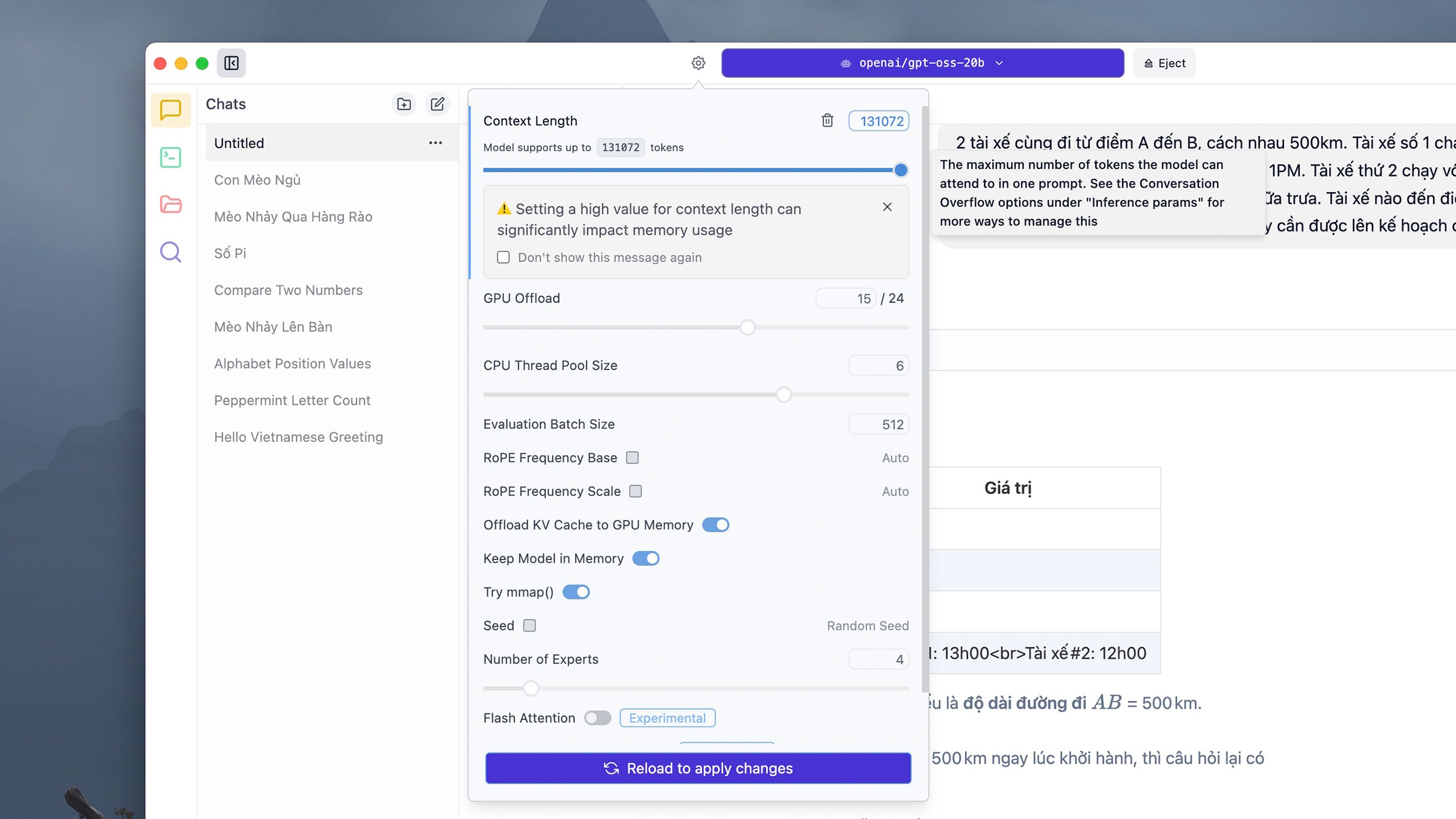

Some complex commands can consume a lot of tokens. By default, each conversation thread supports 4,906 tokens, but users can click the Settings button next to the model selection panel above, adjust the desired number of tokens in the Context Length section, and then click Reload to apply changes . However, LM Studio notes that setting the token limit too high can consume a lot of RAM or VRAM of the machine. |
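A very rough estimate can help judge whether a conversation is likely to fit in the configured window. This sketch assumes about four characters per token, a common rule of thumb for English text and not the model's actual tokenizer. The function names, the reply reserve, and the example window size are hypothetical.

```python
import math

CHARS_PER_TOKEN = 4  # rough rule of thumb, not the real tokenizer


def estimate_tokens(text: str) -> int:
    """Crude token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_context(messages: list, context_length: int, reply_reserve: int = 512) -> bool:
    """True if the messages plus room for a reply fit in context_length tokens."""
    used = sum(estimate_tokens(message) for message in messages)
    return used + reply_reserve <= context_length


# Example: three copies of a short prompt against a hypothetical 8,192-token window.
history = ["Create an SVG image of a cat jumping over a fence."] * 3
print(fits_context(history, context_length=8192))
```

Because the estimate is approximate, it is safer to leave generous headroom than to set the Context Length to the exact figure it suggests.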

Because the model runs directly on the device, response times vary with the hardware. On an M1 iMac with 16 GB of RAM, a complex calculation like the one above takes GPT-OSS-20b about 5 minutes to reason through and solve, while ChatGPT takes about 10 seconds.

On the safety front, OpenAI claims that this is the company’s most thoroughly vetted open model to date. The company says it worked with independent reviewers to check that the model does not pose risks in sensitive areas such as cybersecurity or biotechnology. GPT-OSS’s reasoning process is also shown openly, which helps detect bias, tampering, or abuse.

In addition to LM Studio, users can run GPT-OSS with other apps, such as Ollama. However, Ollama requires a command-line window (Terminal) to download and launch the model before switching to the normal chat interface. On Mac computers, responses are also slower with Ollama than with LM Studio.
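The Terminal steps can also be scripted. The following is a minimal sketch, assuming Ollama is installed and on the PATH and that the model is published under the tag `gpt-oss:20b` (check the tag against Ollama's model library). The helper names are illustrative.

```python
import shutil
import subprocess
from typing import List, Optional

MODEL_TAG = "gpt-oss:20b"  # assumed tag; verify it in Ollama's model library


def ollama_command(action: str, model: str = MODEL_TAG,
                   prompt: Optional[str] = None) -> List[str]:
    """Build an `ollama pull` or `ollama run` command line."""
    if action not in ("pull", "run"):
        raise ValueError(f"unsupported action: {action}")
    command = ["ollama", action, model]
    if action == "run" and prompt is not None:
        command.append(prompt)  # one-shot prompt instead of an interactive session
    return command


def ask(prompt: str, model: str = MODEL_TAG) -> str:
    """Download the model if needed, run one prompt, and return the reply text."""
    if shutil.which("ollama") is None:
        raise RuntimeError("ollama was not found on PATH")
    subprocess.run(ollama_command("pull", model), check=True)
    result = subprocess.run(
        ollama_command("run", model, prompt),
        check=True, capture_output=True, text=True,
    )
    return result.stdout.strip()


# Example (downloads the model on first use, so it can take a while):
# print(ask("Hello"))
```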

Source: https://znews.vn/chatgpt-ban-mien-phi-lam-duoc-gi-post1574987.html
